Stop Worshipping the False God of AGI

AI needs an Enlightenment in which we reject the pursuit of AGI and work instead to develop an AI-native economy

Peter Theil once remarked that crypto is “libertarian” while AI is “communist.” Theil meant that the drive to Artificial General Intelligence (AGI) requires enormous data and very heavy financial and computational resources, and will, if successful, concentrate enormous power in the hands of just a few companies or governments. In this view, AGI will produce profound changes in the economy that will be more far-reaching than the industrial revolution. If AGI becomes super-human and doesn’t decide to kill us all, the technology will supposedly single-handedly solve our economic problems, producing a utopian world in which there will be abundant goods and services available to all at little or no cost.

The belief that AGI will solve our fundamental economic problems is founded on the assumption that intelligence is the most important factor of economic production. AGI boosters believe that Large Language Models (LLM) are already smarter than we are—and getting ever smarter. Soon enough, we will have an army of AI agents whose cognitive abilities vastly surpass humans in every way. Once we put these experts in charge of our economic processes, we will reap a tsunami of economic productivity. If we want a new economic paradise, we must relentlessly pursue AGI by making the models smarter and smarter until they are better than humans at everything. We will have finally achieved Plato’s vision of being ruled by the wisest and the best, except our rulers will be algorithms.

But if LLMs are already so smart, why aren’t they rich? A recent MIT study, The GenAI Divide: State of AI Business 2025, found that only about 5% of AI pilots had any measurable value, that most economic sectors show little to no structural change so far, and that there have been no material employment changes. A study by the Economic Innovation Group confirmed that there has been no discernible effect of AI on employment. The biggest problem with AI, the MIT study found, is that it doesn’t integrate well into business workflows.

The MIT study doesn’t explain why the models are currently failing on real world tasks. The AGI boosters will no doubt retort that the models are not quite intelligent enough just yet. Give it a year or two and chatGPT 6 will raise the AI pilot success rate from 5% to 95%. But is insufficient intelligence really the reason the models are failing in real world business tasks?

We can answer that question by examining a simple business task that the models can’t perform. We’ll see that lack of model intelligence is not the explanation for the model’s failure. Vast intelligence is neither necessary nor sufficient to solve most business problems. The barrier AIs face is that the tools they now use to solve business problems are created by humans for humans and are inefficient for AI use. For AIs to be successful, the underlying infrastructure of tools, information, and coordination used in business processes must be changed to accommodate algorithms. Fortunately, the outlines of that AI economy are starting to develop. Once we have the pieces of an AI economy in place, we should start to see a higher AI pilot success rate, and, ultimately, an AI productivity revolution. We won’t need AGI.

Why Don’t AI Systems Integrate into Workflows Now?

Case Study

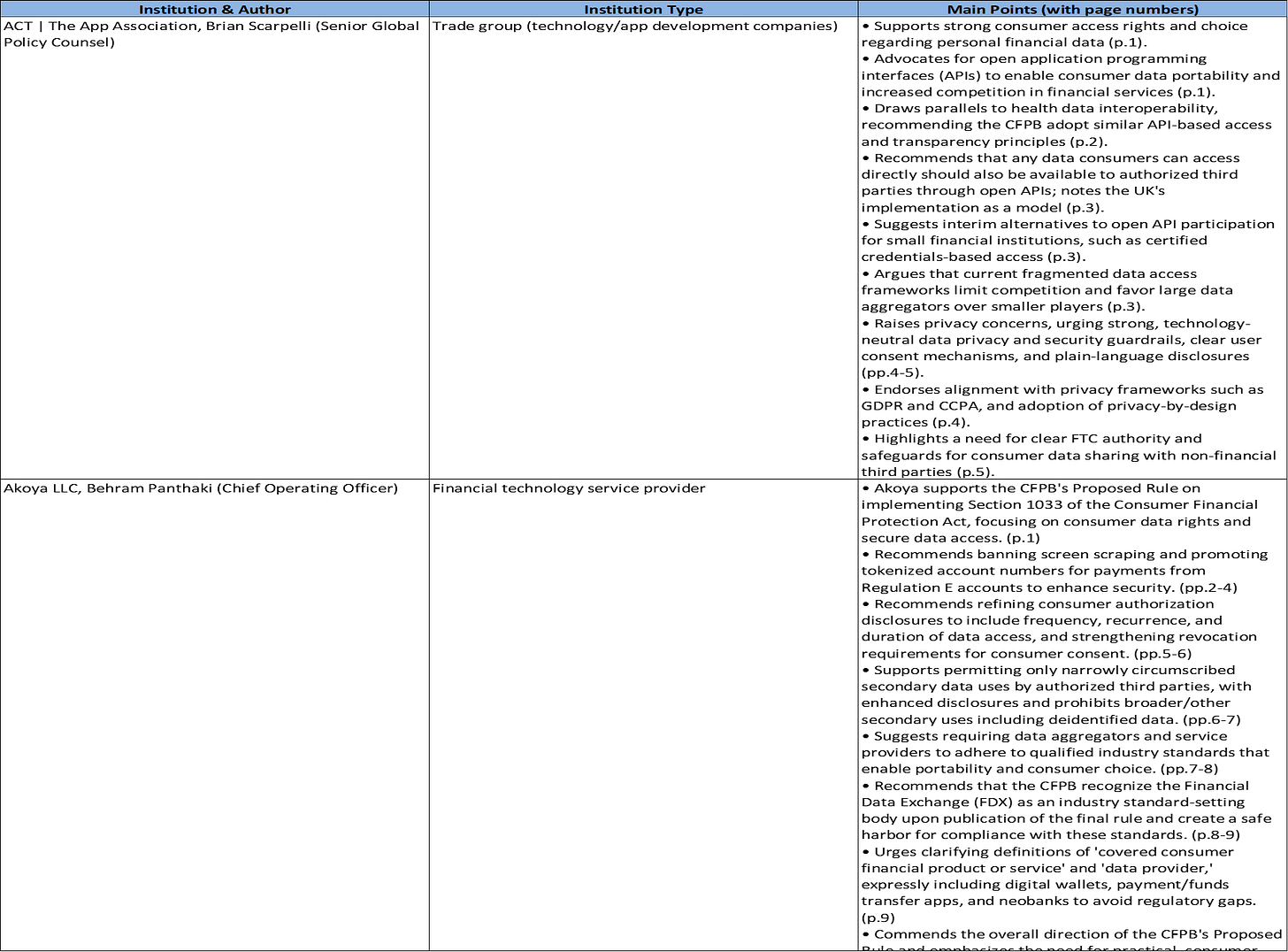

When a federal agency proposes a rule, it is legally required to seek public comments before it issues the final rule. Law firms and other interested parties like to keep track of the comments to understand views on proposed regulations to help them develop strategies for potential litigation.

To create a case study, let’s look at a proposed rule from the Consumer Financial Protection Bureau (CFPB) on personal financial data rights. Before the rule was finalized, the CFPB received 11,140 comments. The task for a human associate in a law firm might be to download and read all of the comments, determine whether they were written by someone representing an institution, keep only the institutional comments, and then assemble a summary of each of them in a table in excel. The first column in the table would contain the author and institution; the second column would contain the type of institution; the third column would contain a bullet point summary of the main points made in the comment with page numbers were the points could be found; and the fourth column would contain any mention of specific firms or institutions with page numbers where the references could be seen. Putting such a table together is probably a week and a half of full-time work for a human. If we could get an AI model to do it, the human associate would gain one and a half weeks to work on higher value projects, substantially boosting the law firm’s productivity.

Can chatGPT Just Do It?

A law associate confronted with such a task would likely start by seeing if chatGPT (or Claude) can do it right out of the box. I checked by sending a query to chatGPT with instructions on how to construct the table I wanted, including the web address where the comment letters could be downloaded. ChatGPT 5 has the ability to search the web, but it refused to download the comment letters. Instead, it asked me to download them myself. ChatGPT 5 informed me that if I could upload the letters, it would create the table I requested. But downloading 11,140 comments manually is a significant portion of the work I had hoped chatGPT would do.

Ever helpful, ChatGPT also offered to write a python script (python is a very commonly used computer language) I could run that would automate the comment downloads. But unless I’m an unusual law firm associate who is conversant with software code, the python script won’t help me.

I asked chatGPT why it would not download the comments itself. It answered that it was not given the ability to do general web scraping, which is not surprising at all. Web pages are designed to be read by humans. They are not designed to support bulk downloads of the information in them. Any mishap caused by chatGPT attempting to scrape a web page could result in the CFPB’s web server being overwhelmed, leading to potential reputational and legal risk for openAI. So, unless I’m willing and able to run chatGPT’s download code, I’ll have to stop right here. Making chatGPT more intelligent will not change anything; the smarter LLM will still not be allowed to perform general web scraping.

Using ChatGPT’s Suggested Code Solution

To see why only 5% of AI pilot projects are succeeding, it will be instructive to attempt to run chatGPT’s suggested code. Let’s pretend I’m an unusual law firm associate who knows his way around coding. I’ll take chatGPT up on its offer to write the download code for me.

Attempt 1: ChatGPT Code Fails

I ran chatGPT’s python code, but it found no comments on the website. I informed chatGPT and it thought about the problem, discovered an oversight in its initial attempt, and then re-wrote the code.

Attempt 2: ChatGPT Code Fails Again

I ran chatGPT’s new code and this time I got only ten links to comments. The links were incorrect also, but I didn’t tell chatGPT at this point. I asked it why it only got ten links when we know there are thousands of comments. chatGPT considered the question, discovered a further problem in its code, and then modified it.

Attempt 3: ChatGPT Code Fails Again

When I ran the code the third time, I still got only ten links. I told chatGPT that there were too few links and that the links did not return any comments. ChatGPT re-wrote the code a fourth time.

Attempt 4: ChatGPT Code Fails Again

On the fourth attempt, the code returned zero comments again. I knew what the problem was from the beginning, but I wanted to see if chatGPT could diagnose the problem on its own.

Let’s Try Another Way

chatGPT was having trouble fundamentally because the CFPB web page is designed for human convenience rather than LLM access. To make the web page interactive for human users, the web designers constructed the page so that humans could push buttons to go from one comment to the next. The buttons were implemented by loading javascript, a web language, directly into the browser, where it would be executed. The javascript would be loaded after a human accessed the web page with his browser, so chatGPT would not see the JavaScript code when it analyzed the web page. To programmatically access such a web page, it would be necessary to simulate a human pushing one button after the next to access each comment.

After some discussion with chatGPT, we decided it should re-write its code using a ‘headless browser’ that could simulate a human being pushing buttons on a web page. This is a common technique used to automate web pages, but after many attempts the LLM and I could not get it to work. So, I looked for another way to solve the problem of downloading the comments programmatically through python.

Maybe We Can Use the Regulations.gov API?

Looking through regulations.gov, I discovered it supports an API, an applications programmer interface, that provides a structured set of rules a human programmer can follow to download comments. The API was not very intuitive and needlessly complicated in my view, but I thought that won’t be my problem: I’ll just ask chatGPT to write the API code.

I explained to the LLM how the API worked and asked it to write the code. When I ran the new code, it didn’t work, issuing error messages I didn’t know how to fix. Each time I asked the LLM to fix the error and re-write the code, it failed again, with a different error message.

I Give Up. I’ll Write the API Code Myself

Rather than wrestling with chatGPT, I knew at this point it would be quicker for me to write the API code myself, using the LLM to suggest code snippets. Doing it myself, I got it done and was able to download some test comments programmatically. At last, we could go to the next step: chatGPT would classify the downloaded comments as being on behalf of an individual or an institution.

chatGPT failed on this step too. Looking at the results, I noticed that the LLM was doing a surprisingly poor job of classifying comments that were written on behalf of institutions. I pored over the code but couldn’t find a bug. Finally, I asked the LLM, “Why do you think you are not doing a good job of classifying the comments?” It responded by telling me there are inherent limitations to the ML classifying tools it was calling. Tools? What tools? I didn’t know it was calling tools behind the scenes. Another subtle gotcha. I was using the LLM in the first place because ML classifying tools don’t work well enough. I fixed the problem by adding to the prompt: “When you are classifying the author of a comment, do not call any tools but rather use your LLM judgment.”

Once the classification problem was fixed, the model still didn’t work properly. I wanted a formatted table with bullet points in excel, but an LLM can only output text. How do you go from text to a formatted table? It’s easy to format text that is written in JSON format, and LLM’s are very good at outputting JSON. JSON is a format that would indicate which text is supposed to go in which column of the table, how the text should be divided by bullet points, and so on. So, in the prompt, I asked the LLM to output its analysis of each comment in JSON so that I could format it in python to go into a spreadsheet table.

However, inexplicably the JSON output didn’t work correctly to create the table and the LLM was no help when I asked it what went wrong. Troubleshooting myself, I found that the LLM was inexplicably outputting a single character at the start of its JSON output and at the end, which caused the python code to get the table wrong. So, I simply asked the LLM in the prompt to only output JSON, with no miscellaneous characters. Now the table could be formatted correctly.

I ran the final python program, hoping it would look at all 11,470 comments and create a table with only the comments made on behalf of institutions. But again, inexplicably, the program just stopped running, even though it had gathered many comments. I did a lot of troubleshooting but couldn’t figure out why. chatGPT was nonplussed as well. Finally searching around regulation.gov, I found a FAQ that said that the API rate limited the requests for comments. After a certain number (much less than the 11,740 I wanted to process), the API shut down and couldn’t be used again until an hour had passed, when the next batch of comments could be downloaded. That would take forever. Regulation.gov was rate limiting bulk downloads to prevent its servers from being overwhelmed. OpenAI had been correct not to give chatGPT web scraping ability, since websites like regulation.gov are obviously concerned about their servers being inundated with download requests.

What Now?

Continuing to root around in regulations.gov, I discovered it supported a bulk downloading service. You apply for a key, and once you get it, you can request a bulk download of comments, with no rate limitations. Once you put in your request, regulations.gov will send you an email with a link you can use to download a table filled with information about each comment, and, most importantly, the link where each comment can be downloaded. At this point, I thought that asking the LLM to modify the code to use the emailed table of links would take longer than me doing it myself, so I did it. Finally, success—the tool worked. Here is a sample of the output.

Success at Last

This AI productivity application could easily be modified in many ways. Instead of displaying the summaries in table format, for example, they could be presented in a white paper.

The LLM Failed to Perform the Simple Task Because it was Using Tools Designed for Humans

I went through this excruciating detail to clarify how errors and problems will continually arise when you ask an LLM to autonomously automate business workflows. There are two factors that limit an LLM’s abilities.

Unlike humans, an LLM can only input and output text

LLMs lack their own tools and processes and are forced to use the human ones, which don’t work for them

To download the comments, chatGPT tried to use a web page that was designed for humans. Humans have eyes to see the page and fingers to push the buttons that download the comments. An LLM has no eyes or fingers. It can only output text and so its only option is to write code to perform the download programmatically. Because web pages are designed for people, openAI will not build bulk download capability into its LLMs. The alternatives offered by regulation.gov for bulk downloads, the API and the bulk download service, are also designed for human coders.

LLMs cannot solve problems robustly by writing code. There are too many gotchas—too many ways for the code to fail. If a human fails, other humans without special expertise can easily figure out what happened. AI experts are much less fault tolerant. When they fail, it’s the result of some highly technical problem that the average person will not be able to audit. AI experts must succeed almost always in the first attempt to be useful. If they don’t succeed initially, they generally can’t fix themselves and are very difficult to troubleshoot.

We should remember that the LLM failed to create the productivity tool by itself. I had to work closely with it, supervising it at every step. After going through this case study, it should be apparent why the success rate for AI pilot projects is so low currently.

To Succeed, LLMs Must Have Tools and Processes That Work for Them

LLMs only input and output text. They can write code, but code, whether written by humans or LLMs, usually has bugs. The more complex the business processes we want AIs to take over, the more complex the code they must write, and therefore the more likely the code will not work on the first attempt. LLMs should therefore not attempt to automate business processes by writing custom code on the fly. At a minimum, they need a solution that involves text but not code.

The framework that would provide LLMs tools and processes that work for them is just beginning to emerge. It has three proposed components:

Model Context Protocol (MCP) servers

The Agent-to-Agent (A2A) protocol

Networked Agents and Decentralized AI (NANDA)

These protocols decentralize LLMs in a division of labor, in much the same way humans cooperate by employing specialized labor in a market economy. The three components create an AI economy that is analogous to the human market economy.

MCP Servers

MCP servers provide the foundational tools in the AI economy. They are like the factors of production in the human economy.

Developed by Anthropic, MCP is an open standard designed to connect LLMs to data, applications, and tools in a consistent and scalable way. An MCP server simplifies what LLMs need to know to solve a problem. For example, if regulation.gov had implemented an MCP server, the LLM could have easily retrieved the comments without writing any code. ChatGPT would have queried the site (or a registration database) to find out what MCP servers might be available. When it discovered that regulation.gov had a server, it would have sent a text query asking about its capabilities and how it can use the server. The server would have responded by telling chatGPT how to issue a text request to retrieve comments. No coding is necessary. All the logic for implementing the retrieval of the comments is hidden behind the scenes in the server. The LLM does not have to know how it works.

To create the table, the LLM could use another MCP server that specializes in taking in text and returning a table to an excel spreadsheet. ChatGPT would have queried the server to find out what it can do and what text it needs to produce a table. Likely, the server would require that chatGPT send its output in JSON or some other structured format. Since we saw that chatGPT can hallucinate extra characters in its JSON output that would cause the server to fail, the server would likely check the input for valid JSON format and inform chatGPT if it hallucinated extra characters.

Armed with an array of MCP servers, an LLM could automate many tasks robustly. But MCP is just in its infancy, and there are competing standards. Regulation.gov has not provided an MCP server. Until MCP or some other robust, LLM-friendly toolset wins and is widely adopted, LLM automation of business processes will be limited.

A2A

The A2A protocol is analogous to the market system in a human economy. It allows multiple LLMs to communicate, share information, and divide tasks into pieces that specialist models can work on, just like the human division of labor in a market economy.

The case study we chose was relatively simple. With just a few MCP servers, an LLM could have automated the creation of the table, saving significant human time. But many business processes are much more complicated. MCP servers will not be enough. LLMs will likely need to call in specialized LLMs to solve specific problems.

NANDA

Nanda is analogous to the legal system in a human economy. Developed at MIT, it allows AI agents to find each other based on their capability or reputation. It supports market mechanisms, negotiation, and resolution of conflicts. It also supports full audibility, so that agents can check whether other agents completed their tasks as promised.

Major Implications of the Coming AI Economy

The use of specialized non-AGI models will favor open weight models. In an open weight model, the weights of the model, which can be tens or hundreds of billions of numbers, are publicly disclosed, allowing them to be modified as needed. The weights of an LLM are like the neurons in a human brain: they contain the knowledge and abilities of the LLM. If we want to change what the model knows or what it is good at, we can change the weights. Open weight models will therefore become at least as important as proprietary models. So far, China is in the lead in developing open weight models.

The AGI vision concentrates power, but a decentralized AI economy diffuses power. An AI economy will substantially reduce the risk of inordinate economic power being concentrated in a few corporations or governments that control the technology.

The AI economy will need a financial system so that AIs can pay each other for services. AIs will also have to pay MCP servers and NANDA components for legal services with remittances that are like taxes. Crypto is a natural fit for an AI financial system, especially when the AI economy crosses borders. The AI economy is thus the killer app for crypto.

The AI economy will need a human legal framework to interact with the human economy. AIs, for example, probably need to be legal persons in the same way corporations are so that they can enter into enforceable contracts, be sued and sue, and bring other legal actions.

The AI Productivity Revolution Does Not Depend on Achieving AGI

AI researchers have been worshipping the false god of AGI for decades now. But AIs don’t have to be better at everything any more than humans need to be. They just need to be better at their specialty. Being very good at a particular cognitive task, even better than all humans, does not require AGI. A group of highly specialized non-AGI models, working together in their own economy, will outperform any one model that tries to outperform in all areas. Instead of trying to surpass artificial model benchmarks by making the next foundation LLM model even bigger, AI needs an enlightenment in which researchers reject the pursuit of AGI and turn instead to creating the AI economy.

An excellent analysis. Your clearly expressed thoughts reinforce and add to what I have been arguing for years. I often point back to the 1980s and 1990s "IT productivity paradox" in which greatly increased computing power for years and decades stubbornly refused to show up in clear productivity increases.

I think you are correct in emphasizing the "AI economy" rather than a single, all-power AI. In fact, I'm currently writing partly about that for the upcoming Beneficial AGI Summit. This alternative view of what is likely and feasible (given LLMs and not a different approach such as "cognitive AI" or "neurosymbolic AI") has strong implications for AI safety discussions. I think it further underlines the implausibility of catastrophic AI scenarios.